Docker Stack

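Docker Stack is similar to docker-compose: both use a YAML file to deploy multiple services. However, docker-compose can only deploy the services onto a single machine, whereas docker stack deploys them across multiple machines in swarm mode, and each service can define multiple replicas.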

The following docker-compose.yml defines the flask-redis application from the previous section:

version: "3"

Deploy it with docker stack:

ubuntu@swarm-manager:~/flask-redis$ docker stack deploy --compose-file docker-compose.yml web

Creating network web_my-network

Creating service web_redis

Creating service web_web

Check the status of the services:

ubuntu@swarm-manager:~/flask-redis$ docker service ls

ID NAME MODE REPLICAS IMAGE

i5d8wcqy684o web_web replicated 3/3 heqingliang/flaskredis_web

prwd381d4700 web_redis global 1/1 redis:latest

Check the status of a single service:

ubuntu@swarm-manager:~/flask-redis$ docker service ps web_web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

x6wwegl7m7hk web_web.1 heqingliang/flaskredis_web swarm-worker2 Running Running about a minute ago

lrc1rxi9e788 web_web.2 heqingliang/flaskredis_web swarm-worker1 Running Running about a minute ago

zeilj8s901ei web_web.3 heqingliang/flaskredis_web swarm-manager Running Running about a minute ago

ubuntu@swarm-manager:~/flask-redis$ docker service ps web_redis

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

t1y4et6ympxi web_redis.y271lmw7frng7p6btfwic0pfs redis:latest swarm-manager Running Running 2 minutes ago

Access the service on port 5000 of any node:

ubuntu@swarm-manager:~/flask-redis$ curl 192.168.33.10:5000

<h1>Hello Container, I have been seen b'1' times.</h1>

ubuntu@swarm-manager:~/flask-redis$ curl 192.168.33.11:5000

<h1>Hello Container, I have been seen b'2' times.</h1>

ubuntu@swarm-manager:~/flask-redis$ curl 192.168.33.12:5000

<h1>Hello Container, I have been seen b'3' times.</h1>

Remove the stack and its services:

ubuntu@swarm-manager:~/flask-redis$ docker stack rm web

Removing service web_web

Removing service web_redis

Removing network web_my-network

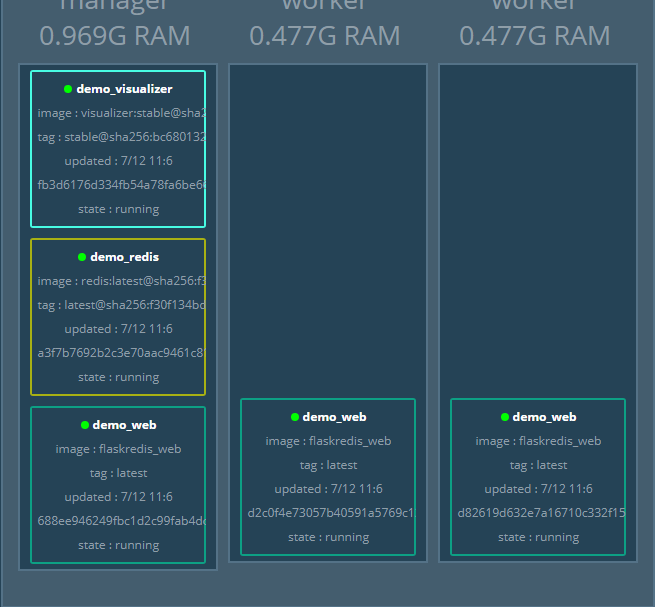

visualizer

visualizer is a service that displays the services of a Swarm cluster in a web UI. For example, add a visualizer service to the docker-compose.yml above:

visualizer:

After deploying, open port 8080 on the manager node to see the following:

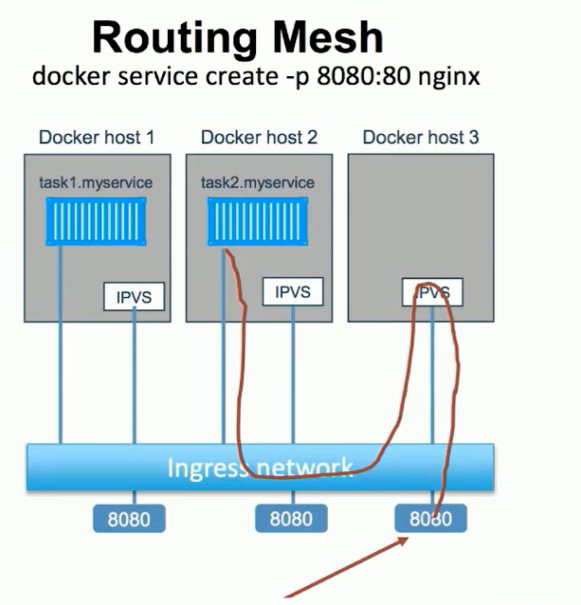

Routing Mesh

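In a Swarm cluster, when an external client requests a service on any node, Swarm uses the ingress network to route the request to a container on whichever host runs it; this is how services are exposed to the outside network. Traffic between containers goes through a VIP (virtual IP) and is load-balanced by LVS.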

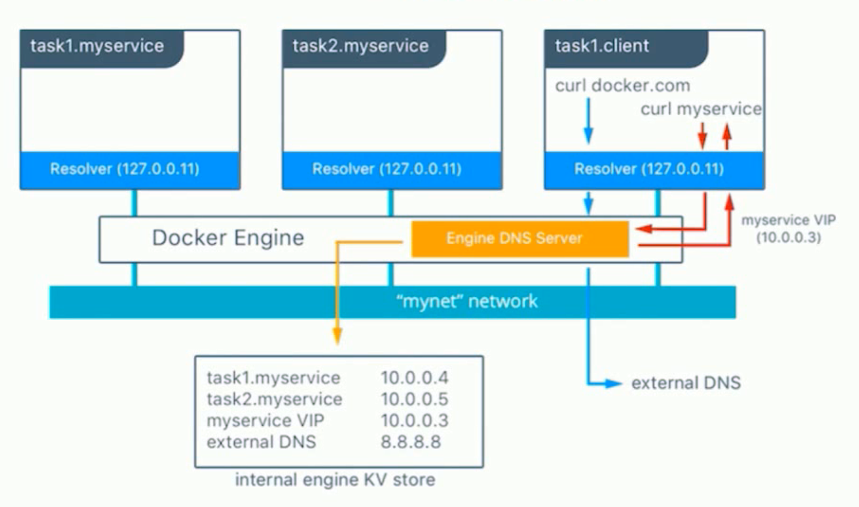

VIP

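In a Swarm cluster, when you create a service whose containers are attached to an overlay network (for example, a service named myservice), Docker's embedded DNS server adds a record such as myservice → 10.0.0.3. That address is not a container IP but a VIP (virtual IP): container IPs change as tasks are rescheduled, whereas the VIP stays fixed.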

Suppose Swarm scales myservice to two tasks, task1.myservice and task2.myservice, running on two different machines with container IPs 10.0.0.4 and 10.0.0.5. Requests to myservice still target the VIP 10.0.0.3, and Swarm uses LVS to distribute them to 10.0.0.4 or 10.0.0.5.
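The VIP idea can be illustrated with a small Python toy model: one fixed address, with requests handed round-robin to whichever task IPs currently back it. This is only an analogy; in Swarm the balancing is done in the kernel by IPVS, and the `VirtualIP` class and its methods are hypothetical names.

```python
from itertools import cycle

class VirtualIP:
    """Toy model of a Swarm service VIP (illustration only, not Docker's code)."""

    def __init__(self, vip, backends):
        self.vip = vip              # stays the same for the life of the service
        self.set_backends(backends)

    def set_backends(self, backends):
        # Scaling changes the set of task IPs behind the VIP, never the VIP itself.
        if not backends:
            raise ValueError("a VIP needs at least one backend task")
        self.backends = list(backends)
        self._next = cycle(self.backends)

    def route(self):
        # Round-robin choice of the task IP that receives this request.
        return next(self._next)

svc = VirtualIP("10.0.0.3", ["10.0.0.4"])
svc.set_backends(["10.0.0.4", "10.0.0.5"])  # scale myservice to two tasks
print(svc.vip, [svc.route() for _ in range(4)])
# 10.0.0.3 ['10.0.0.4', '10.0.0.5', '10.0.0.4', '10.0.0.5']
```

Clients only ever see `svc.vip`; scaling up or down just swaps the backend list.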

Create an overlay network named demo:

ubuntu@swarm-manager:~$ docker network create -d overlay demo

jw8a5qcul5pljzt09m3wkjgjk

Create a whoami service from the jwilder/whoami image (which responds with its container ID), attached to the demo network. The service is scheduled on swarm-worker1:

ubuntu@swarm-manager:~$ docker service create --name whoami -p 8000:8000 --network demo jwilder/whoami

o56u12e455yx4d22tnpes5jpn

ubuntu@swarm-manager:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

o56u12e455yx whoami replicated 1/1 jwilder/whoami:latest

ubuntu@swarm-manager:~$ docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xfi5wuypwuoc whoami.1 jwilder/whoami:latest swarm-worker1 Running Running 16 seconds ago

ubuntu@swarm-manager:~$ curl 127.0.0.1:8000

I'm e6560538dfce

Create a centos service attached to the demo network. It runs on swarm-worker2:

ubuntu@swarm-manager:~$ docker service create --name centos --network demo centos sh -c "while true; do sleep 3600; done"

vk1a30yjef6954d8arbbfri8b

ubuntu@swarm-manager:~$ docker service ps centos

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lgktuxe54ny9 centos.1 centos:latest swarm-worker2 Running Preparing 14 seconds ago

On swarm-worker2, enter the container and run ping whoami. The name whoami resolves to 10.0.0.2:

ubuntu@swarm-worker2:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9c6f9552e3a2 centos@sha256:67dad89757a55bfdfabec8abd0e22f8c7c12a1856514726470228063ed86593b "sh -c 'while true..." 16 seconds ago Up 16 seconds centos.1.lgktuxe54ny9kpktrod7zkmaw

ubuntu@swarm-worker2:~$

ubuntu@swarm-worker2:~$ docker exec -it 9c6f /bin/bash

[root@9c6f9552e3a2 /]# ping whoami

PING whoami (10.0.0.2) 56(84) bytes of data.

64 bytes from 10.0.0.2 (10.0.0.2): icmp_seq=1 ttl=64 time=0.240 ms

64 bytes from 10.0.0.2 (10.0.0.2): icmp_seq=2 ttl=64 time=0.135 ms

10.0.0.2 is not the IP address of the whoami container but a VIP. After whoami is scaled out to two replicas, ping whoami on swarm-worker2 still resolves to 10.0.0.2.

Scale out whoami:

ubuntu@swarm-manager:~$ docker service scale whoami=2

whoami scaled to 2

ubuntu@swarm-manager:~$ docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xfi5wuypwuoc whoami.1 jwilder/whoami:latest swarm-worker1 Running Running 14 minutes ago

6nghi6p0i9gh whoami.2 jwilder/whoami:latest swarm-manager Running Running 8 seconds ago

Run ping whoami on swarm-worker2 again:

[root@9c6f9552e3a2 /]# ping whoami

PING whoami (10.0.0.2) 56(84) bytes of data.

64 bytes from 10.0.0.2 (10.0.0.2): icmp_seq=1 ttl=64 time=0.097 ms

64 bytes from 10.0.0.2 (10.0.0.2): icmp_seq=2 ttl=64 time=0.138 ms

Run nslookup whoami on swarm-worker2:

[root@9c6f9552e3a2 /]# nslookup whoami

Server: 127.0.0.11

Address: 127.0.0.11#53

Non-authoritative answer:

Name: whoami

Address: 10.0.0.2

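Even though whoami now has two replicas, its name still resolves to 10.0.0.2. This address does not belong to either container; it is a VIP.

Running nslookup tasks.whoami on swarm-worker2 returns the containers' actual IP addresses, 10.0.0.6 and 10.0.0.3: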

[root@9c6f9552e3a2 /]# nslookup tasks.whoami

Server: 127.0.0.11

Address: 127.0.0.11#53

Non-authoritative answer:

Name: tasks.whoami

Address: 10.0.0.6

Name: tasks.whoami

Address: 10.0.0.3
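The same two lookups can be done from application code. A minimal Python sketch using only the standard `socket` module; it assumes a Python 3 interpreter inside a container attached to the demo network, which the stock centos image used above may not provide, and `resolve_ipv4` is a hypothetical helper name.

```python
import socket

def resolve_ipv4(name):
    """Return the sorted, de-duplicated IPv4 addresses that `name` resolves to."""
    # getaddrinfo returns one entry per socket type, so collect into a set first.
    infos = socket.getaddrinfo(name, None, socket.AF_INET)
    return sorted({info[4][0] for info in infos})
```

Inside such a container, `resolve_ipv4("whoami")` would be expected to return only the VIP (10.0.0.2 here), while `resolve_ipv4("tasks.whoami")` returns one address per running task.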

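Inspecting the whoami containers on swarm-manager and swarm-worker1 confirms that their addresses are 10.0.0.6 and 10.0.0.3, and that each container also carries the VIP 10.0.0.2 as a /32 address: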

ubuntu@swarm-manager:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

35e229a6a2bd jwilder/whoami@sha256:c621c699e1becc851a27716df9773fa9a3f6bccb331e6702330057a688fd1d5a "/app/http" 12 minutes ago Up 12 minutes 8000/tcp whoami.2.6nghi6p0i9gha04ol892roor2

ubuntu@swarm-manager:~$ docker exec 35e229a6a2bd ip a

61: eth0@if62: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether 02:42:0a:00:00:06 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.6/24 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.2/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:fe00:6/64 scope link

valid_lft forever preferred_lft forever

ubuntu@swarm-worker1:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e6560538dfce jwilder/whoami@sha256:c621c699e1becc851a27716df9773fa9a3f6bccb331e6702330057a688fd1d5a "/app/http" 28 minutes ago Up 28 minutes 8000/tcp whoami.1.xfi5wuypwuocwytmozixtvjg9

ubuntu@swarm-worker1:~$ docker exec e6560538dfce ip a

43: eth0@if44: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether 02:42:0a:00:00:03 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.3/24 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.2/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:fe00:3/64 scope link

valid_lft forever preferred_lft forever

The mapping between the VIP and the container IP addresses is implemented with LVS.
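The pattern in the `ip a` output above (a /24 task address plus a /32 VIP on the same interface) can be checked with Python's standard `ipaddress` module. A minimal sketch; treating the /32 entry as the VIP is a heuristic inferred from this output, not a documented Docker rule, and `split_vip` is a hypothetical name.

```python
import ipaddress
import re

# Matches "inet <addr>/<prefix>" but not "inet6 ..." lines.
INET_RE = re.compile(r"^\s*inet\s+(\S+)")

def split_vip(ip_a_output):
    """Split IPv4 addresses from `ip a` output into (task_ifaces, vip_ifaces)."""
    tasks, vips = [], []
    for line in ip_a_output.splitlines():
        m = INET_RE.match(line)
        if not m:
            continue
        iface = ipaddress.ip_interface(m.group(1))
        # Assumption: the VIP is the extra /32; the task IP carries the overlay prefix.
        (vips if iface.network.prefixlen == 32 else tasks).append(iface)
    return tasks, vips

sample = """
inet 10.0.0.6/24 scope global eth0
inet 10.0.0.2/32 scope global eth0
inet6 fe80::42:aff:fe00:6/64 scope link
"""
tasks, vips = split_vip(sample)
for vip in vips:
    # The VIP lies inside the same overlay subnet as the task address.
    print(vip.ip, "in", tasks[0].network, "->", vip.ip in tasks[0].network)
# 10.0.0.2 in 10.0.0.0/24 -> True
```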

Ingress

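Ingress provides external load balancing for Swarm: when a service deployed in the Swarm publishes a port, that port is exposed on every node, so an external client can reach the service through any node.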

In the figure, an external request for the web service arrives at Docker host3, which runs no task of that service. Swarm forwards the request over the ingress network to Docker host1 or Docker host2, where IPVS load-balances it to a specific container.

Continuing the whoami example above, the service has two replicas, running on swarm-manager and swarm-worker1:

ubuntu@swarm-manager:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

o56u12e455yx whoami replicated 2/2 jwilder/whoami:latest

ubuntu@swarm-manager:~$ docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xfi5wuypwuoc whoami.1 jwilder/whoami:latest swarm-worker1 Running Running about an hour ago

6nghi6p0i9gh whoami.2 jwilder/whoami:latest swarm-manager Running Running 56 minutes ago

Although swarm-worker2 runs no whoami task, requests to whoami on this node still succeed. This is handled by Ingress:

ubuntu@swarm-worker2:~$ curl 127.0.0.1:8000

I'm 35e229a6a2bd

ubuntu@swarm-worker2:~$ curl 127.0.0.1:8000

I'm e6560538dfce

ubuntu@swarm-worker2:~$ curl 127.0.0.1:8000

I'm 35e229a6a2bd

Inspect the local iptables NAT rules:

ubuntu@swarm-worker2:~$ sudo iptables -nL -t nat

Chain DOCKER-INGRESS (2 references)

target prot opt source destination

DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 to:172.18.0.2:8000

RETURN all -- 0.0.0.0/0 0.0.0.0/0

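So traffic to port 8000 that hits the DOCKER-INGRESS chain is forwarded (DNAT) to 172.18.0.2:8000.

As shown below, 172.18.0.2 is on the docker_gwbridge network (172.18.0.1/16), and docker network inspect lists it as the address of the ingress-sbox endpoint: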
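To see where each published port is forwarded, the DNAT rules can be extracted from the `iptables -nL -t nat` listing. A minimal Python sketch that parses the text format shown above; the regular expression is an assumption fitted to this output and may need adjusting for other iptables versions, and `parse_dnat` is a hypothetical name.

```python
import re

# Matches lines like: DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 to:172.18.0.2:8000
DNAT_RE = re.compile(r"^DNAT\s+(\w+)\s.*\bdpt:(\d+)\s+to:([\d.]+):(\d+)")

def parse_dnat(rules_text):
    """Return (proto, published_port, target_ip, target_port) for each DNAT rule."""
    rules = []
    for line in rules_text.splitlines():
        m = DNAT_RE.match(line.strip())
        if m:
            proto, dport, ip, port = m.groups()
            rules.append((proto, int(dport), ip, int(port)))
    return rules

chain = """
Chain DOCKER-INGRESS (2 references)
target prot opt source destination
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 to:172.18.0.2:8000
RETURN all -- 0.0.0.0/0 0.0.0.0/0
"""
print(parse_dnat(chain))  # [('tcp', 8000, '172.18.0.2', 8000)]
```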

ubuntu@swarm-worker2:~$ ip a

5: docker_gwbridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ee:b8:d3:c6 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 scope global docker_gwbridge

valid_lft forever preferred_lft forever

inet6 fe80::42:eeff:feb8:d3c6/64 scope link

valid_lft forever preferred_lft forever

ubuntu@swarm-worker2:~$ docker network inspect docker_gwbridge

"Containers": {

"ingress-sbox": {

"Name": "gateway_ingress-sbox",

"EndpointID": "3961d073a92a60be853fcdf948928ba5aca50048df104d042983dae5f21b05e3",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

Enter the ingress_sbox network namespace and run ip a; the 172.18.0.2 address appears on eth1:

ubuntu@swarm-worker2:~$ sudo ls /var/run/docker/netns

1-m2k86yipxx ingress_sbox

ubuntu@swarm-worker2:~$ sudo nsenter --net=/var/run/docker/netns/ingress_sbox

root@swarm-worker2:~#

root@swarm-worker2:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 02:42:0a:ff:00:05 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.255.0.5/16 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:feff:5/64 scope link

valid_lft forever preferred_lft forever

10: eth1@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 172.18.0.2/16 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:2/64 scope link

valid_lft forever preferred_lft forever

Install the IPVS management tool on the host with sudo apt-get install ipvsadm, then run the following commands inside the ingress_sbox namespace:

root@swarm-worker2:~# iptables -nL -t mangle

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

MARK tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 MARK set 0x116

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain FORWARD (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

MARK all -- 0.0.0.0/0 10.255.0.2 MARK set 0x116

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

root@swarm-worker2:~# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

FWM 278 rr

-> 10.255.0.6:0 Masq 1 0 0

-> 10.255.0.7:0 Masq 1 0 0

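Inside ingress_sbox, packets for port 8000 are marked with 0x116 (278 in decimal). ipvsadm -l shows that firewall mark 278 is balanced round-robin (rr) across 10.255.0.6 and 10.255.0.7, which are the containers' IP addresses on the ingress network: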

ubuntu@swarm-manager:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

35e229a6a2bd jwilder/whoami@sha256:c621c699e1becc851a27716df9773fa9a3f6bccb331e6702330057a688fd1d5a "/app/http" About an hour ago Up About an hour 8000/tcp whoami.2.6nghi6p0i9gha04ol892roor2

ubuntu@swarm-manager:~$ docker exec 35e229a6a2bd ip a

56: eth2@if57: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether 02:42:0a:ff:00:07 brd ff:ff:ff:ff:ff:ff

inet 10.255.0.7/16 scope global eth2

valid_lft forever preferred_lft forever

inet 10.255.0.2/32 scope global eth2

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:feff:7/64 scope link

valid_lft forever preferred_lft forever

ubuntu@swarm-worker1:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e6560538dfce jwilder/whoami@sha256:c621c699e1becc851a27716df9773fa9a3f6bccb331e6702330057a688fd1d5a "/app/http" About an hour ago Up About an hour 8000/tcp whoami.1.xfi5wuypwuocwytmozixtvjg9

ubuntu@swarm-worker1:~$ docker exec e6560538dfce ip a

47: eth2@if48: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether 02:42:0a:ff:00:06 brd ff:ff:ff:ff:ff:ff

inet 10.255.0.6/16 scope global eth2

valid_lft forever preferred_lft forever

inet 10.255.0.2/32 scope global eth2

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:feff:6/64 scope link

valid_lft forever preferred_lft forever
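The chain from published port to container can be followed mechanically: the mangle table maps port 8000 to a firewall mark, and ipvsadm maps that mark to real servers. A minimal Python sketch that joins the two outputs shown above; the regular expressions are fitted to this output only, and the function names are hypothetical.

```python
import re

# Sample output copied from the ingress_sbox namespace above (trimmed).
MANGLE = """
Chain PREROUTING (policy ACCEPT)
MARK tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 MARK set 0x116
Chain OUTPUT (policy ACCEPT)
MARK all -- 0.0.0.0/0 10.255.0.2 MARK set 0x116
"""
IPVS = """
Prot LocalAddress:Port Scheduler Flags
-> RemoteAddress:Port Forward Weight ActiveConn InActConn
FWM 278 rr
-> 10.255.0.6:0 Masq 1 0 0
-> 10.255.0.7:0 Masq 1 0 0
"""

MARK_RE = re.compile(r"\bdpt:(\d+)\s+MARK set (0x[0-9a-fA-F]+)")
FWM_RE = re.compile(r"^FWM\s+(\d+)")
REAL_RE = re.compile(r"^->\s+([\d.]+):\d+")

def port_to_mark(mangle_text):
    """Map published port -> firewall mark (as an int) from `iptables -nL -t mangle`."""
    return {int(port): int(mark, 16) for port, mark in MARK_RE.findall(mangle_text)}

def fwm_backends(ipvsadm_text):
    """Map firewall mark -> real-server IPs from `ipvsadm -l` output."""
    table, current = {}, None
    for raw in ipvsadm_text.splitlines():
        line = raw.strip()
        if m := FWM_RE.match(line):
            current = int(m.group(1))
            table[current] = []
        elif current is not None and (m := REAL_RE.match(line)):
            table[current].append(m.group(1))
    return table

def trace(port, mangle_text, ipvsadm_text):
    """Follow a published port through its firewall mark to the IPVS backends."""
    mark = port_to_mark(mangle_text)[port]
    return mark, fwm_backends(ipvsadm_text).get(mark, [])

mark, backends = trace(8000, MANGLE, IPVS)
print(hex(mark), mark, backends)  # 0x116 278 ['10.255.0.6', '10.255.0.7']
```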

Docker Secret

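For sensitive information such as usernames and passwords or database passwords, Docker Secret can be used to keep the data encrypted.

Secrets are stored on the Swarm's manager nodes (in the Raft log, which is encrypted).

A secret can be granted to a service, and that service can then use it.

Creating a secret

A secret can be created from a file or from standard input.

From a file

The following creates a secret named my-pw from the file password: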

ubuntu@swarm-manager:~$ cat password

admin123

ubuntu@swarm-manager:~$ docker secret create my-pw password

q4gjv8wbevw3ep7lt6u8wvjtu

ubuntu@swarm-manager:~$ rm -rf password

From standard input

ubuntu@swarm-manager:~$ echo "admin123" | docker secret create my-pw2 -

jou51ev01lg9l6mngyqp4dg3c
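Note that `echo` appends a newline, so my-pw2 above actually stores `admin123` followed by a newline (in the shell, `printf` avoids this). A minimal Python sketch that pipes the value through standard input, with no trailing newline and no file left on disk, using the same `docker secret create NAME -` form as above; the function name and the injectable `run` parameter are illustrative assumptions.

```python
import subprocess

def create_secret(name, value, run=subprocess.run):
    """Create a Swarm secret by piping `value` to `docker secret create NAME -`.

    `run` is injectable so the call can be inspected without a Docker daemon.
    """
    cmd = ["docker", "secret", "create", name, "-"]
    # The value goes to stdin exactly as given: no trailing newline is added.
    result = run(cmd, input=value.encode(), capture_output=True, check=True)
    return result.stdout.decode().strip()  # docker prints the new secret ID
```

For example, `create_secret("my-pw3", getpass.getpass())` (my-pw3 is a hypothetical name) prompts for the value instead of writing it into a file or the shell history.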

Listing secrets

ubuntu@swarm-manager:~$ docker secret ls

ID NAME CREATED UPDATED

jou51ev01lg9l6mngyqp4dg3c my-pw2 22 seconds ago 22 seconds ago

q4gjv8wbevw3ep7lt6u8wvjtu my-pw 4 minutes ago 4 minutes ago

Removing a secret

ubuntu@swarm-manager:~$ docker secret rm my-pw2

jou51ev01lg9l6mngyqp4dg3c

ubuntu@swarm-manager:~$ docker secret ls

ID NAME CREATED UPDATED

q4gjv8wbevw3ep7lt6u8wvjtu my-pw 5 minutes ago 5 minutes ago

Using a secret

When creating a service, use --secret to specify the secret it may use:

ubuntu@swarm-manager:~$ docker service create --name client --secret my-pw busybox sh -c "while true; do sleep 3600; done"

ic06w88supfz3e1ib5405fe22

ubuntu@swarm-manager:~$

ubuntu@swarm-manager:~$ docker service ps client

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

iqgj9261jpld client.1 busybox:latest swarm-worker1 Running Running 57 seconds ago

Once the client service above is running, its container can read the my-pw secret under /run/secrets:

ubuntu@swarm-worker1:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0a352c3b6b24 busybox@sha256:2a03a6059f21e150ae84b0973863609494aad70f0a80eaeb64bddd8d92465812 "sh -c 'while true..." 2 minutes ago Up 2 minutes client.1.iqgj9261jpldxx2yon0cuh5zs

ubuntu@swarm-worker1:~$ docker exec -it 0a35 sh

/ # cd /run/secrets/

/run/secrets # ls

my-pw

/run/secrets # cat my-pw

admin123
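Inside the container, an application reads the secret as an ordinary file. A minimal Python sketch, assuming the default mount path /run/secrets/<name> shown above; `read_secret` is a hypothetical helper name.

```python
from pathlib import Path

def read_secret(name, secrets_dir="/run/secrets"):
    """Read a Swarm secret mounted at <secrets_dir>/<name>.

    Trailing newlines (e.g. from `echo ... | docker secret create`) are stripped.
    """
    return (Path(secrets_dir) / name).read_text().rstrip("\n")
```

In the client container above, `read_secret("my-pw")` would return `admin123`.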

The following creates a MySQL service that uses the my-pw secret as the root password; entering admin123 at the password prompt logs in to MySQL:

ubuntu@swarm-manager:~$ docker service create --name db --secret my-pw -e MYSQL_ROOT_PASSWORD_FILE=/run/secrets/my-pw mysql

kbvk5o9x0igw72eb6qtrvxcd9

ubuntu@swarm-manager:~$ docker service ps db

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

5y5t18sf4qlf db.1 mysql:latest swarm-manager Running Running 3 minutes ago

ubuntu@swarm-manager:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

77d809bbccd0 mysql@sha256:811483efcd38de17d93193b4b4bc4ba290a931215c4c8512cbff624e5967a7dd "docker-entrypoint..." 3 minutes ago Up 3 minutes 3306/tcp, 33060/tcp db.1.5y5t18sf4qlfju69h0rmnh21g

ubuntu@swarm-manager:~$ docker exec -it 77d8 sh

# mysql -u root -p

Enter password:
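MYSQL_ROOT_PASSWORD_FILE follows a convention used by several official images: if VAR_FILE is set, the value of VAR is read from that file. A Python sketch of the same convention for one's own services; it is modelled loosely on the official images' entrypoint behaviour (which also rejects setting both variables), not copied from it, and `env_or_file` is a hypothetical name.

```python
import os

def env_or_file(var, default=None, environ=os.environ):
    """Resolve VAR from the environment, or from the file named by VAR_FILE."""
    file_var = var + "_FILE"
    if var in environ and file_var in environ:
        # Ambiguous configuration: refuse rather than silently pick one.
        raise ValueError(f"both {var} and {file_var} are set (they are exclusive)")
    if file_var in environ:
        with open(environ[file_var]) as fh:
            return fh.read().rstrip("\n")  # e.g. /run/secrets/my-pw
    return environ.get(var, default)
```

With `-e MYSQL_ROOT_PASSWORD_FILE=/run/secrets/my-pw` as above, `env_or_file("MYSQL_ROOT_PASSWORD")` would return the secret's contents.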

Service Update

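Docker Swarm supports rolling updates: during an update, some replicas keep running at all times, which keeps the service available.

Create a Python program, app.py:

#!/usr/bin/env python3

Use the following Dockerfile:

FROM python

Build the flask-demo:1.0 image: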

ubuntu@swarm-manager:~/flask$ docker build -t flask-demo:1.0 .

Change version 1.0 in the code above to version 2.0 and build the flask-demo:2.0 image:

ubuntu@swarm-manager:~/flask$ docker build -t flask-demo:2.0 .

Create an overlay network named demo:

docker network create -d overlay demo

Create a service from flask-demo:1.0:

ubuntu@swarm-manager:~/flask$ docker service create --name web -p 5000:5000 --network demo flask-demo:1.0

ubuntu@swarm-manager:~/flask$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lh4znay97v76 web.1 flask-demo:1.0 swarm-worker1 Running Running 2 seconds ago

For an update not to interrupt the service, the service needs at least two replicas. Scale it to 2:

ubuntu@swarm-manager:~/flask$ docker service scale web=2

ubuntu@swarm-manager:~/flask$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lh4znay97v76 web.1 flask-demo:1.0 swarm-worker1 Running Running 3 minutes ago

tf101bckttcm web.2 flask-demo:1.0 swarm-worker2 Running Running less than a second ago
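Why one replica is not enough can be seen with a toy simulation. By default `docker service update` replaces tasks one at a time (`--update-parallelism 1`) and stops each old task before starting its replacement (`--update-order stop-first`). The Python sketch below counts how many tasks are still serving at the moment each batch of old tasks is stopped; it ignores health checks, `--update-delay`, and scheduling latency, and `rolling_update` is a hypothetical name.

```python
def rolling_update(replicas, parallelism=1):
    """Simulate a stop-first rolling update.

    Returns, for each batch, the number of tasks still running in the gap
    between stopping the old tasks and starting their replacements.
    """
    old, new = replicas, 0
    during = []
    while old:
        batch = min(parallelism, old)
        old -= batch                # stop-first: old tasks go down first...
        during.append(old + new)    # ...leaving this many tasks serving
        new += batch                # ...then the replacements come up
    return during

print(rolling_update(1))  # [0]     a single replica leaves a gap
print(rolling_update(2))  # [1, 1]  one task is always serving
```

With `--update-order start-first`, the replacement is started before the old task stops, which narrows the gap even for a single replica.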

On worker1, run the following loop to keep requesting the web service and check whether it is interrupted during the update:

ubuntu@swarm-worker1:~/flask$ sh -c "while true; do curl 127.0.0.1:5000 && sleep 1; done"

Hello Docker, version 1.0

Update the web service to the flask-demo:2.0 image. After a short while, the responses seen on worker1 switch to version 2.0:

ubuntu@swarm-manager:~/flask$ docker service update --image flask-demo:2.0 web

# on worker1

Hello Docker, version 2.0
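The shell loop above can also be written as a small Python poller that records each change in the response body and counts failed requests, which makes an interruption during the update easy to spot. A minimal sketch using only the standard library; `fetch` and `watch` are hypothetical names, and the URL is the one used above.

```python
import time
import urllib.request

def fetch(url, timeout=2.0):
    """GET `url` and return the response body as stripped text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode().strip()

def watch(url, rounds, interval=1.0, get=fetch):
    """Poll `url`; return (list of body changes in order, failure count)."""
    changes, failures = [], 0
    for _ in range(rounds):
        try:
            body = get(url)
            if not changes or changes[-1] != body:
                changes.append(body)    # record each transition, e.g. 1.0 -> 2.0
        except OSError:                 # URLError, timeouts, resets are OSErrors
            failures += 1
        time.sleep(interval)
    return changes, failures
```

Running `watch("http://127.0.0.1:5000", rounds=60)` on worker1 while the update is in progress would be expected to show the switch from version 1.0 to 2.0; a failure count of zero means no interruption was observed during that window.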

Checking the service shows the flask-demo:1.0 tasks in the Shutdown state and the flask-demo:2.0 tasks Running:

ubuntu@swarm-manager:~/flask$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

xlb8qurxht5o web.1 flask-demo:2.0 swarm-worker2 Running Running 3 minutes ago

v0rhw47u1v3x \_ web.1 flask-demo:1.0 swarm-worker2 Shutdown Shutdown 3 minutes ago

uieqy7m9pxzn web.2 flask-demo:2.0 swarm-worker1 Running Running 3 minutes ago

siuaivldjfz6 \_ web.2 flask-demo:1.0 swarm-worker1 Shutdown Shutdown 3 minutes ago

To update services deployed with docker stack, edit docker-compose.yml and run docker stack deploy again; Swarm updates the services according to the changes in the file.